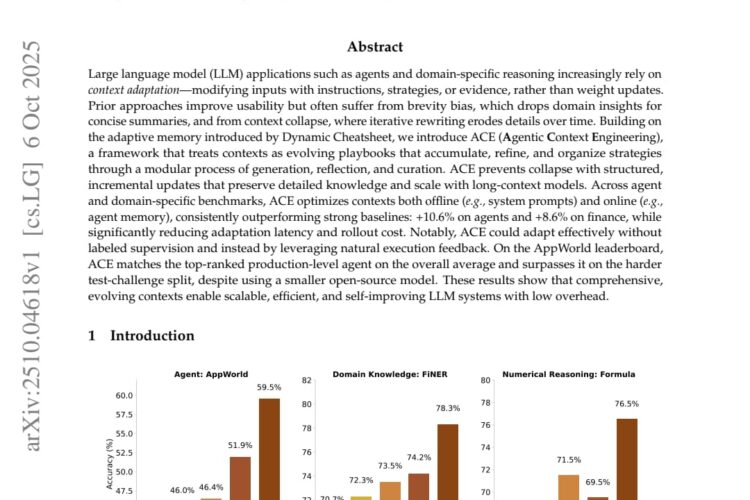

A New Research Paper That May Stay With Us for a Long Time: AI Doesn’t Need Fine-Tuning to Get Smarter; It Needs Something Else

Researchers from Stanford, SambaNova Systems, and UC Berkeley have released a new framework called ACE (Agentic Context Engineering), which shifts the core of model improvement from retraining to self-evolving context engineering!

How it works:

✴️ The model is not retrained; instead, it evolves its context to become a living strategy guide.

✴️ The model writes, reviews, and corrects its own prompts based on real-world execution outcomes.

✴️ Every failure becomes a crucial rule, and every success becomes an added strategy in the context.
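
To make the loop above concrete, here is a minimal sketch in Python. It is not the paper's code: the `Playbook` class, the `update_from_outcome` helper, and the example lessons are all hypothetical names made up for illustration. In a real system the lesson text would presumably come from the model reflecting on its own execution trace; here it is passed in as a plain string.

```python
from dataclasses import dataclass, field


@dataclass
class Playbook:
    """A growing list of context entries (hypothetical structure, not the paper's code)."""
    entries: list[str] = field(default_factory=list)

    def add(self, kind: str, text: str) -> bool:
        """Append an entry unless an identical one already exists."""
        entry = f"[{kind}] {text}"
        if entry in self.entries:
            return False
        self.entries.append(entry)
        return True

    def render(self, task: str) -> str:
        """Build the prompt: accumulated entries first, then the task."""
        guide = "\n".join(f"- {e}" for e in self.entries)
        return f"Playbook:\n{guide}\n\nTask: {task}"


def update_from_outcome(playbook: Playbook, succeeded: bool, lesson: str) -> None:
    """Turn one execution outcome into a context entry; no model weights change."""
    # Assumption for this sketch: failures are stored as rules, successes as strategies.
    kind = "strategy" if succeeded else "rule"
    playbook.add(kind, lesson)


playbook = Playbook()
update_from_outcome(playbook, False, "Check that the API token is set before calling the service.")
update_from_outcome(playbook, True, "Paginate through results instead of assuming one page.")
# The same lesson again: the duplicate is skipped, so the context does not bloat.
update_from_outcome(playbook, False, "Check that the API token is set before calling the service.")

prompt = playbook.render("Summarize last month's invoices.")
print(prompt)
```

The point of the sketch is that the only thing that changes between runs is the text in `entries`; the model itself stays frozen, and the context is appended to rather than rewritten from scratch.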

Why this shift matters 🤔

✴️ ACE improves on strong baselines by 10.6% on agent tasks (the AppWorld benchmark) and by 8.6% on complex financial reasoning tasks.

✴️ It is also efficient: the paper reports 86.9% lower adaptation latency on average, along with lower rollout cost, compared to previous adaptive methods.

The end of an era.

💡 While everyone is heading towards short, clean prompts, ACE does the exact opposite: it builds detailed, long prompt guides that accumulate knowledge. This suggests that large language models (LLMs) benefit less from simplicity than from contextual density when handling complex tasks.

Research Paper Link 🔗 👇